What is Docker? Why is it important and necessary for developers? Part I

What Is Docker and What Does Containerization Mean?

As you know, ships are the main way goods are distributed around the world. In the past, shipping was expensive because every cargo had its own shape and type of material. Loading a bag of fish onto a ship is a different task from loading a car, and each requires different processes and tools. Loading required a variety of cranes and equipment, and packing cargo efficiently on the ship itself, given its fragility, was far from trivial.

At some point, however, everything changed. Containers made all types of cargo look the same and standardized loading and unloading equipment around the world, which in turn simplified and accelerated the process and, consequently, lowered costs. Containerization, the shift of freight transport to standardized intermodal containers, took off in the 1960s and transformed the entire logistics system.

The same thing happened in software development. Docker has become a universal tool for delivering software, regardless of the software's structure, dependencies, or installation method. Everything a program distributed through Docker needs is inside its image and is isolated from the host system and from other containers. The importance of this cannot be overstated. Updating one program no longer touches the system itself or other programs, so an update does not break them. All you need to do is download a new image of the program you want to update. In other words, Docker largely solved the "dependency hell" problem and made infrastructure immutable.

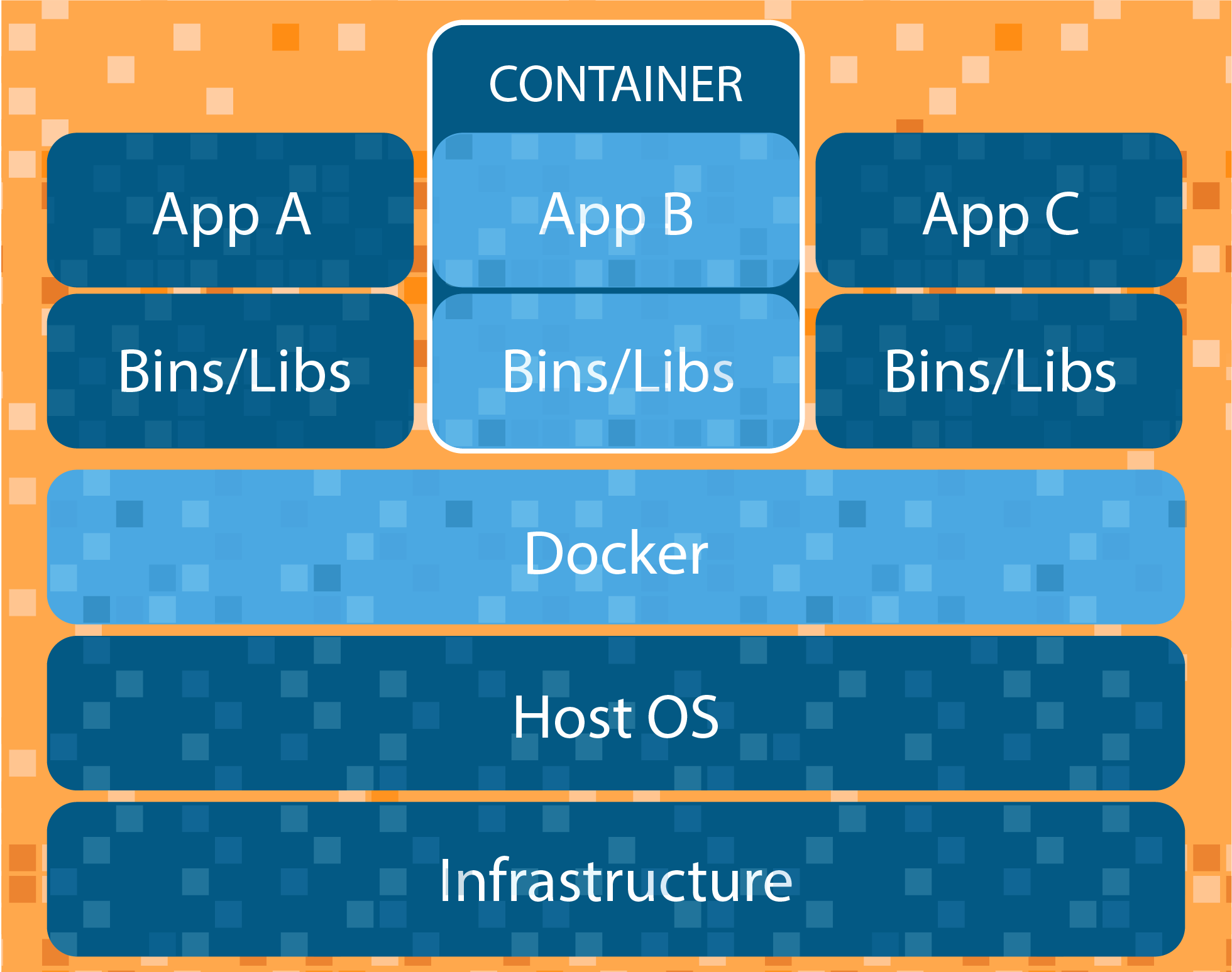

Docker is a tool designed to make it easier to create, deploy, and run applications using containers. Containers allow a developer to package an application with all the parts it needs, such as libraries and other dependencies, and ship it all out as one package. Thanks to the container, the developer can rest assured that the application will run on any other Linux machine, regardless of any customized settings that machine might have that could differ from the machine used for writing and testing the code.

In a way, Docker is a bit like a virtual machine. But unlike a virtual machine, rather than creating a whole virtual operating system, Docker allows applications to use the same Linux kernel as the system they're running on and only requires that applications be shipped with the things not already running on the host computer. This gives a significant performance boost and reduces the size of the application.

And importantly, Docker is open source. This means that anyone can contribute to Docker and extend it to meet their own needs if they need additional features that aren't available out of the box.

Docker is a tool that is designed to benefit both developers and system administrators, making it a part of many DevOps (developers + operations) toolchains. For developers, it means that they can focus on writing code without worrying about the system that it will ultimately be running on. It also allows them to get a head start by using one of thousands of programs already designed to run in a Docker container as a part of their application. For operations staff, Docker gives flexibility and potentially reduces the number of systems needed because of its small footprint and lower overhead.

Things you should know about Docker:

- Docker is not LXC.

- Docker is not a virtual machine solution.

- Docker is not a configuration management system and is not a replacement for Chef, Puppet, Ansible, etc.

- Docker is not a Platform-as-a-Service (PaaS) technology.

Docker Components:

Docker is composed of the following four components:

- Docker Client and Daemon

- Images

- Docker registries

- Containers

Docker architecture

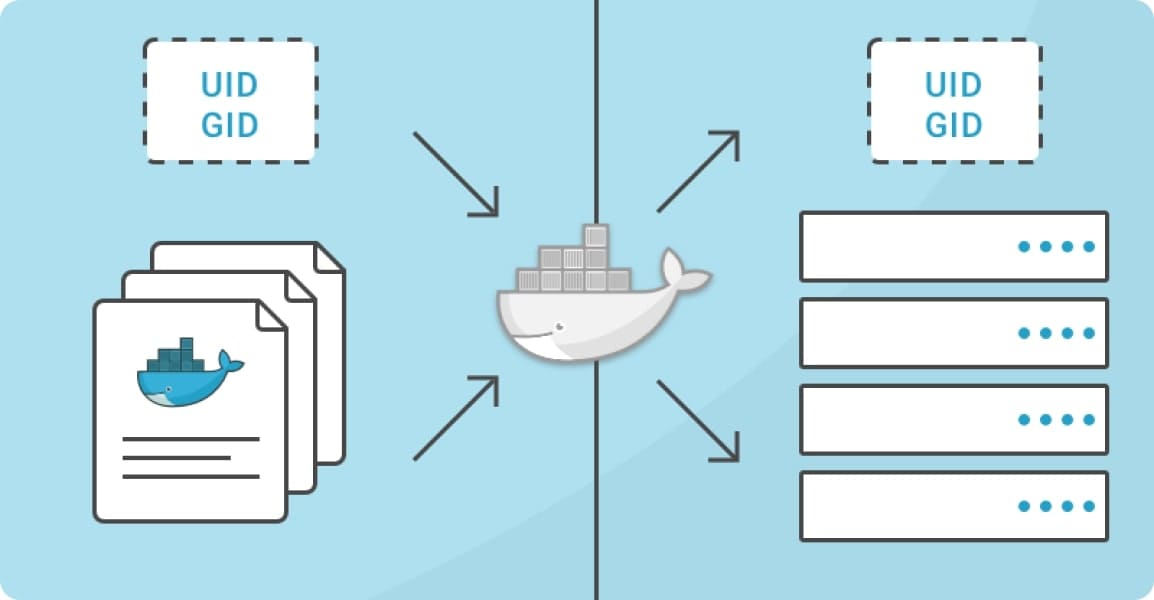

Images

Images are the basic building blocks of Docker. Containers are built from images. Images can be configured with applications and used as templates for creating containers. An image is organized in layers: every change to an image is added as a new layer on top of the existing ones.
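
The layering idea can be sketched in a few lines of Python. This is a toy model under simplifying assumptions, not how Docker's storage drivers work: each layer is a dict mapping file paths to contents, the hypothetical `add_layer` method returns a new image instead of modifying the old one, and the hypothetical `DELETED` marker plays the role of the "whiteout" entries that union filesystems such as OverlayFS use to hide a file from lower layers. A lookup searches from the top layer down, so newer layers shadow older ones.

```python
from types import MappingProxyType

# Hypothetical marker standing in for a union-filesystem "whiteout" entry.
DELETED = object()


class Image:
    """Toy image: an ordered stack of read-only layers, bottom layer first."""

    def __init__(self, layers=()):
        # Freeze every layer behind a read-only view so it cannot change later.
        self.layers = tuple(MappingProxyType(dict(layer)) for layer in layers)

    def add_layer(self, changes):
        """Return a NEW image with one extra layer on top; self is left untouched."""
        return Image(self.layers + (changes,))

    def read(self, path):
        """Resolve a path by searching the layers from the top down."""
        for layer in reversed(self.layers):
            if path in layer:
                if layer[path] is DELETED:
                    break  # hidden by a whiteout in a higher layer
                return layer[path]
        raise FileNotFoundError(path)

    def files(self):
        """Merged view of all layers, i.e. what a container started from this image sees."""
        merged = {}
        for layer in self.layers:
            for path, content in layer.items():
                if content is DELETED:
                    merged.pop(path, None)
                else:
                    merged[path] = content
        return merged


base = Image([{"/etc/os-release": "Ubuntu 18.04", "/tmp/cache": "stale"}])
app = base.add_layer({"/app/server.py": "server v1", "/tmp/cache": DELETED})
patched = app.add_layer({"/app/server.py": "server v2"})

print(patched.read("/app/server.py"))  # server v2
print(app.read("/app/server.py"))      # server v1
print(base.read("/tmp/cache"))         # stale
print(sorted(patched.files()))         # ['/app/server.py', '/etc/os-release']
```

Note that `base` and `app` are unchanged after `patched` is built, which mirrors the fact that existing image layers are never modified; a change always produces a new layer.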

Docker registry

A Docker registry is a repository for Docker images. Using a registry, you can build and share images with your team. A registry can be public or private. Docker Inc. provides a hosted registry service called Docker Hub, which lets you upload and download images from a central location. If your repository is public, all your images can be accessed by other Docker Hub users. You can also host private repositories on Docker Hub, Google Container Registry, Amazon Elastic Container Registry (ECR), etc. Docker Hub works somewhat like Git: you build images locally on your laptop, commit them, and then push them to Docker Hub.

Container

A container is the execution environment in Docker. Containers are created from images: a container adds a writable layer on top of the image's read-only layers. You can package your application in a container, commit it, and make it a golden image from which to build more containers. Two or more containers can be linked together to form a tiered application architecture. Containers can be started, stopped, committed, and removed. If you remove a container without committing it, all the changes made in that container are lost.
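
A minimal Python sketch of this container model, assuming a simplified picture in which layers are plain dicts: the container shares the image's read-only layers and writes only to its own writable layer. The hypothetical `commit()` method turns that layer into a new image, while a container removed without committing takes its layer with it. The class and method names are illustrative and are not Docker's API.

```python
class Container:
    """Toy container: shared read-only image layers plus a private writable layer."""

    def __init__(self, image_layers):
        self.image_layers = tuple(image_layers)  # shared with the image, never modified
        self.writable = {}                       # this container's own writable layer

    def write(self, path, content):
        # Every write goes to the writable layer; the image layers stay untouched.
        self.writable[path] = content

    def read(self, path):
        # Check the writable layer first, then the image layers from top to bottom.
        for layer in (self.writable, *reversed(self.image_layers)):
            if path in layer:
                return layer[path]
        raise FileNotFoundError(path)

    def commit(self):
        """Freeze the writable layer into a NEW image (a longer tuple of layers)."""
        return self.image_layers + (dict(self.writable),)


base_image = ({"/etc/nginx/nginx.conf": "default"},)

web = Container(base_image)
web.write("/etc/nginx/nginx.conf", "tuned")
golden_image = web.commit()  # the change now lives in a new image

scratch = Container(base_image)
scratch.write("/etc/nginx/nginx.conf", "experimental")
del scratch  # "removed" without a commit: its writable layer is simply gone

print(Container(base_image).read("/etc/nginx/nginx.conf"))    # default
print(Container(golden_image).read("/etc/nginx/nginx.conf"))  # tuned
```

Every new container started from `golden_image` sees the tuned configuration, while the experimental change never reaches any image.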

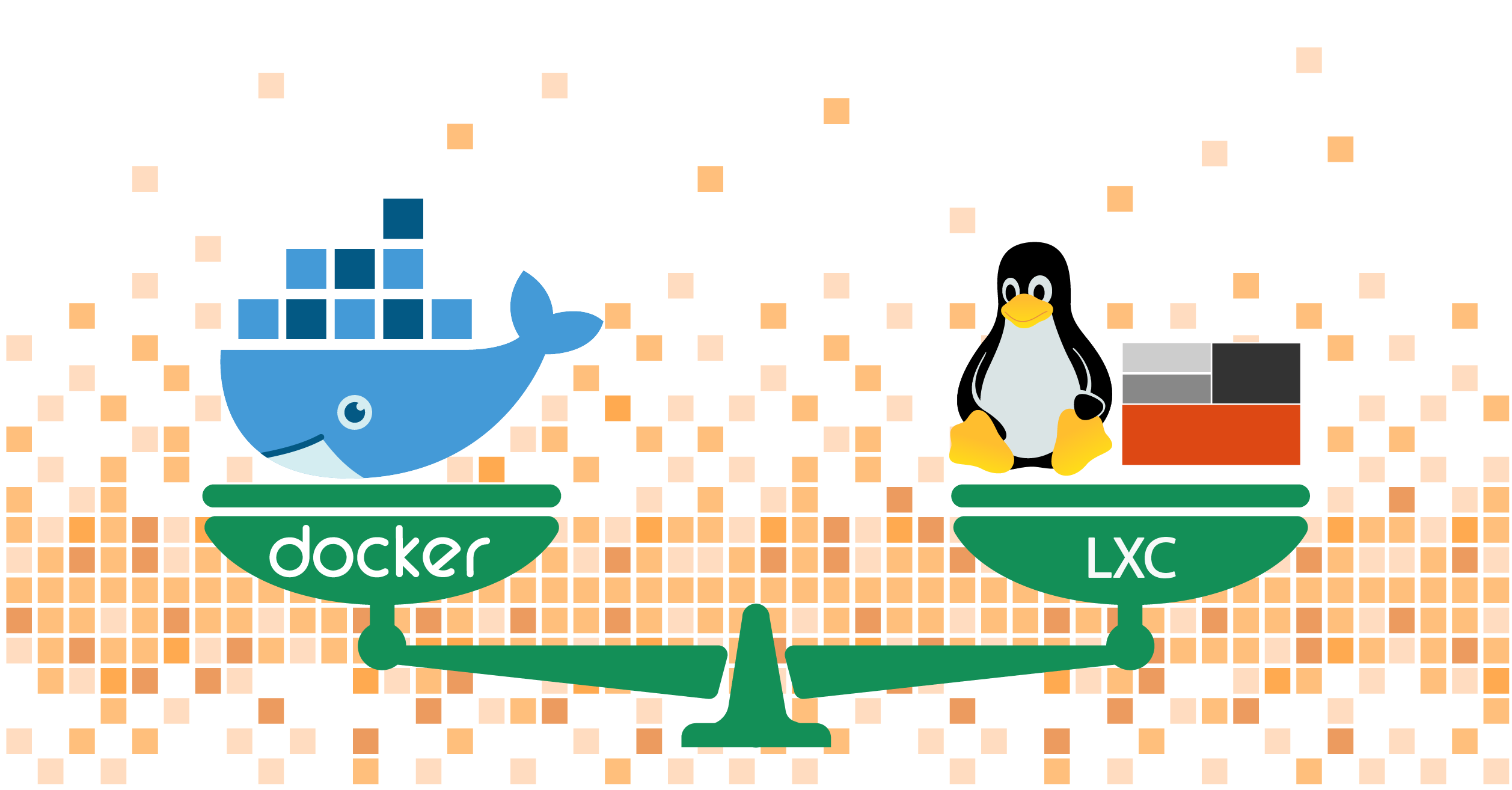

From LXC to Docker

The original Linux container technology is called Linux Containers, or LXC. LXC is an OS-level virtualization method designed to run multiple isolated Linux systems on a single host.

Containers separate applications from the operating system. This means users can keep a clean, minimal Linux OS and run everything else in one or more isolated containers. Because the operating system is separate from the containers, you can move a container to any Linux server that supports the container's runtime environment. Docker, which began as a project to build single-application LXC containers, substantially reworked the LXC approach and made containers more portable and flexible.

The main differences between Docker and LXC:

1. Single process vs. multiple processes

Docker is designed around running a single process per container. If your application environment consists of X concurrent processes, you would typically run X containers, each with its own process. LXC containers, by contrast, can run many processes. To run a simple multi-tier web application in Docker, you would need a PHP container, an Nginx web server container, a MySQL container for the database process, and several data containers to store database tables and other application data.

Single-process containers have many benefits, including simpler and smaller updates: you do not need to kill the database process when you only want to update the web server. Single-process containers are also an efficient architecture for building microservice-based applications. They do have limitations, however. For example, you cannot run agents, logging scripts, or automatically started SSH processes inside the container. It is also hard to make small application-level upgrades to a single-process container; instead, you have to launch a new, updated container.

2. Stateless vs. stateful

Docker containers are designed to be more stateless than LXC containers.

First, Docker containers have no persistent storage of their own. Docker works around this by letting you mount host storage into containers as Docker volumes. Because volumes are mounted, they are not considered part of the container environment.
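
The difference between a container's own writable layer and a mounted volume can be modeled in a few lines of Python. This is a sketch under simplifying assumptions: the hypothetical `Volume` class stands in for host storage, and any write under a mount point is routed to it instead of to the container. When the container is removed, only the data outside the volume disappears.

```python
class Volume:
    """Hypothetical stand-in for host storage mounted into containers."""

    def __init__(self):
        self.files = {}


class Container:
    def __init__(self, mounts=None):
        self.writable = {}          # discarded when the container is removed
        self.mounts = mounts or {}  # mount point -> Volume living outside the container

    def _target(self, path):
        # Paths under a mount point go to the volume; everything else to the writable layer.
        for mount_point, volume in self.mounts.items():
            if path.startswith(mount_point.rstrip("/") + "/"):
                return volume.files
        return self.writable

    def write(self, path, content):
        self._target(path)[path] = content

    def read(self, path):
        return self._target(path).get(path)


db_data = Volume()

db = Container(mounts={"/var/lib/mysql": db_data})
db.write("/var/lib/mysql/users.ibd", "alice,bob")  # lands in the volume
db.write("/tmp/scratch.log", "debug output")        # lands in the writable layer
del db                                              # container removed

replacement = Container(mounts={"/var/lib/mysql": db_data})
print(replacement.read("/var/lib/mysql/users.ibd"))  # alice,bob
print(replacement.read("/tmp/scratch.log"))          # None
```

This is why databases and other stateful services keep their data in volumes: the container itself stays disposable, and a replacement container can pick up exactly where the old one left off.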

Second, Docker containers consist of read-only layers. This means that once a container image has been created, it does not change. If, during execution, a process in the container changes its internal state, a difference is created between that internal state and the image from which the container was created.

If you run the docker commit command, this difference becomes part of a new image: not the original one, but a new image from which you can create new containers. If you delete the container without committing it, the difference disappears.

A stateless container is an unusual entity. You can update the container, but a series of updates creates a series of new container images, which makes rolling back easy.

3. Portability

This is perhaps Docker's most important advantage over LXC. Docker abstracts away networking, storage, and OS details more thoroughly than LXC does. With Docker, the application is genuinely independent of how these low-level resources are configured. When you move a Docker container from one Docker host to another, Docker ensures that the application's environment stays the same.

A direct benefit of this approach is that Docker helps developers create local development environments that mirror the production server. When developers finish writing the code and begin testing it, they can wrap it in a container and publish it directly to a server or a private cloud, and it should work right away because it runs in the same environment.

With LXC, a developer can get something running on their own machine, only to find that the code does not work correctly when deployed to a server. The server environment is different, and the developer has to spend a lot of time tracking down the difference and fixing the problem. Docker largely eliminates this class of problem.

4. Architecture designed for developers

Separating applications from the underlying hardware is the fundamental concept of virtualization. Containers go even further and separate applications from the OS. Thanks to this feature, programmers get flexibility and scalability during development.

What else has Docker replaced?

It is worth listing the infrastructure technologies that became redundant once Docker appeared. Of course, these technologies remain indispensable for other uses; after all, how would you replace SSH or Git where they serve other purposes?

Chef / Puppet / Ansible

A concise, fast Dockerfile replaces the stateful server configuration management system. Since Docker can build a container image in seconds and launch a container in milliseconds, you no longer need to pile up scripts that keep a heavy, expensive server up to date. It is enough to start a new container and shut down the old one. If you were already using Chef to quickly deploy stateless servers before Docker arrived, you probably already love Docker.

Upstart / systemd / Supervisor / launchd / God.rb / SysVinit

For containerized services, archaic service launch systems based on static configurations are no longer needed. In their place comes the Docker daemon, an init-like process that can monitor running services, restart failed ones, store exit codes and logs, and, most importantly, download and run any container with any service on any machine, whatever role that machine plays.
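
Docker exposes the "restart failed ones" behavior through restart policies chosen with `docker run --restart` (`no`, `on-failure[:max-retries]`, `always`, `unless-stopped`). The Python sketch below only imitates the idea behind `on-failure` with a retry limit; the `Supervisor` class and the fake service are hypothetical and say nothing about how the Docker daemon is actually implemented.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Supervisor:
    """Toy model of an 'on-failure' restart policy with a retry limit."""

    run: Callable[[], int]  # starts the service and returns its exit code
    max_retries: int = 3
    exit_codes: List[int] = field(default_factory=list)

    def supervise(self) -> int:
        restarts = 0
        while True:
            code = self.run()
            self.exit_codes.append(code)  # keep a history of exit codes
            if code == 0:                 # clean exit: do not restart
                return code
            if restarts >= self.max_retries:
                return code               # retry budget exhausted: give up
            restarts += 1


# A fake service that crashes twice, then exits cleanly.
outcomes = iter([1, 137, 0])
sup = Supervisor(run=lambda: next(outcomes), max_retries=3)
print(sup.supervise(), sup.exit_codes)  # 0 [1, 137, 0]
```

With `max_retries=2`, a service that always fails is started three times in total (the first run plus two restarts) before the supervisor gives up and reports the last exit code.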

Ubuntu_18.04.iso / AMI-W7FIS1T / apt-get update

Like Vagrant, Docker finally lets us forget about downloading long-obsolete installation DVD images, memorizing the control panels of different cloud platforms, and lamenting that they never have the latest Ubuntu. Most importantly, you can build your own custom image with everything you need in a couple of minutes on your local machine and push it to Docker Hub. And no more apt-get update after installation: your images are ready to work as soon as they are built.

RUBY_ENV, database.dev.yml, testing vs. staging vs. backup

Since Docker lets you run containers with all their dependencies in an environment that matches your configuration bit for bit, you can bring up an exact copy of production on your own computer in a couple of seconds. The operating system environment, the database and caching servers, the application itself, and the library versions it uses are all reproduced accurately on the developer's or tester's machine. If you have always dreamed of having a backup production server ready to take over if the main one fails, run docker-compose up and you have two production servers that are bit-for-bit copies of each other. Starting with Docker 1.10, image and layer identifiers are SHA256 digests of their content, which guarantees that identical identifiers mean identical content.
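
Content addressing is easy to demonstrate with Python's standard `hashlib`: an object's identifier is the SHA256 hash of its bytes, so identical content always yields the same ID and any change yields a different one. The sketch is loosely modeled on the way an image configuration lists its layers' digests under `rootfs.diff_ids`, but the JSON layout and the hypothetical `image_id` helper are simplifications, not Docker's exact format.

```python
import hashlib
import json


def digest(data: bytes) -> str:
    """Content address: the SHA256 of the bytes, in 'sha256:<hex>' notation."""
    return "sha256:" + hashlib.sha256(data).hexdigest()


def image_id(layers: list, config: dict) -> str:
    # Simplified image config that embeds the digest of every layer, so the
    # resulting ID changes whenever any layer or any setting changes.
    image_config = {
        "config": config,
        "rootfs": {"type": "layers", "diff_ids": [digest(layer) for layer in layers]},
    }
    # sort_keys gives a stable serialization, so equal content hashes equally.
    return digest(json.dumps(image_config, sort_keys=True).encode())


layers = [b"base filesystem tarball", b"application code v1"]
config = {"Cmd": ["python", "app.py"]}

built_here = image_id(layers, config)
built_on_ci = image_id(list(layers), dict(config))
patched = image_id([layers[0], b"application code v2"], config)

print(built_here == built_on_ci)  # True: identical content, identical ID
print(built_here == patched)      # False: one changed layer changes the ID
```

Because the ID is derived from the content itself, two machines that report the same image ID are, for practical purposes, running the same bits.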

Note also that a tool as powerful as Docker fits well with the Twelve-Factor App methodology for building software-as-a-service applications, which brings a number of advantages.

SSH (sic!), VPN, Capistrano, Jenkins-slave

To start a container, you do not need root access on the server, you do not need to fiddle with keys, and you do not need to configure a deployment system. With Docker, deployment is like building and configuring a physical server at home, testing it thoroughly, and then magically moving it to any number of data centers with one command. To do this, point your local Docker client at the remote daemon (for example, by setting DOCKER_HOST) and run docker run on your laptop; the client connects to the server over TLS and starts everything. Getting inside a container is also easier: docker exec -it %containername% bash gives you a debugging console. You can even run rsync through Docker like this: rsync -e 'docker exec -i' --blocking-io -rv CONTAINER_NAME:/data . The main thing to remember is to add --blocking-io.

No vendor lock-in

It's gone. Want to move the entire application to another continent in a couple of minutes, or spread it around the globe? Containers don't care where they run, and because they are connected by a virtual network, they can reach each other from anywhere, including a development machine during live debugging.

This is only a brief introduction to a tool as powerful as Docker. In our next article, you will learn more about what Docker offers, find out what Dokku is, and see how we use these tools in our practice.